So following on from my last post about trying to generate cartoon ducks using AI -- and accidentally producing something quite Warhol-ish, I decided to try and generate a new profile pic for myself on our intranet at work. A colleague of mine said that my current pic makes me look Mr Noodle from sesame street . No. You can't see the pic. But maybe my beard was a bit too unruly at the time, and maybe the wall behind me was a bit too bright and primary-colourish.

So could I use the same technique of Generative Adversarial Networks (GANs) to produce a new image of "me"?

Let's recap how these networks work with a little analogy:

"So Sir, can you describe to us the person who robbed you of your wallet?"

"Yes officer, he was male, early forties, caucasian, 5'9", short brown hair, glasses, a beard and moustache"

[sketch artist works furiously]

"Like this?"

"No, the glasses were thinner, wire-framed type"

[sketch artist draws a new drawing with different glasses]

"Like this?"

"Yeah... maybe smaller nose"

[sketch artist draw a new drawing with smaller nose]

We have two neural networks, one, the generator (the sketch artist) and a second one, the discriminator (me). The first one is creating new images and the second one is trying to critique them. If the critic can't tell the difference between a 'fake' and a 'real' image, then the generator (sketch artist) has learned how to produce good likenesses of the subject.

So first, I needed a whole load of real images for the generator to feed to the discriminator in amongst its 'fake' ones to see if it could tell the difference.

Luckily Apple iPhones already have some machine learning in them to identify and categorise people. So I can easily copy 300 pictures from the last 5 years of myself from the phone to my desktop computer for processing.

I then opened them all up in Preview and very quickly and roughly cropped them to just have my face in. I discarded those that I was wearing sunglasses, or at a very odd angle to the camera.

I then fed those images into the GAN from before. And out of the gaussian noise, started to emerge a somewhat recognisable me...

Further...

So there, are definitely some likenesses there, but still in most of them I look like some apparition from a horror film.

I realised, that the images all being slightly different crops and orientations was giving the GAN a hard time. Bearing in mind this is a fairly simple network and we are far from the state of the art.

So I realised that I could use another bit of machine learning to pre-process the images. I could use a computer vision library to detect my facial features and then rotate, shift and scale the image such that at least my eyes were in the same place in each photo.

If you want to know the full technical details and code on this, I've written a separate (subscribers only) post on Using OpenCV2 to Align Images for DCGAN .

But the end result was pretty cool... and quite spooky. So here is an animated gif showing a few of the input images:

Notice how my face is slightly different size, and eyes moving about in different locations? Compare that to the aligned images:

Pretty eerie, right? Those are the same images as above, but the pre-processing AI has calculated the location of my eyes, and transformed the image such that my eyes are in the same location in each image.

So lets feed that back into the GAN and see how we do...

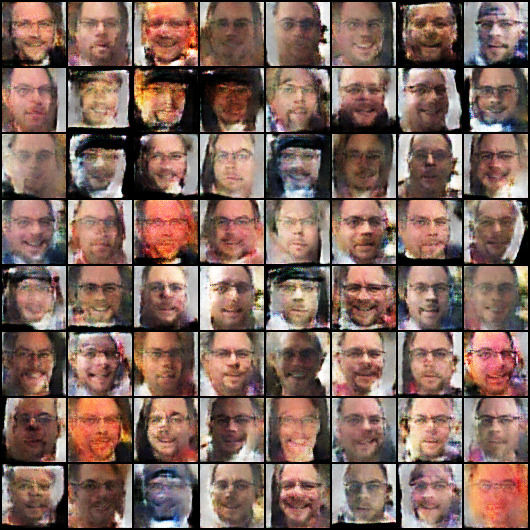

Not bad! There are certainly some ones there were it looks like I've been hit in the face with a shovel a few times... but actually overall it has done pretty well.

Again, what is pretty amazing is that none of these images ever existed . They are not distorted photos, but they are the result of a machine learning algorithm learning what my facial features look like and creating an entirely new and unique image of me.

So to recap, in the end there were three entirely separate machine learning / AI algorithms used in this process:

- The machine learning on my iPhone that had analysed all my photos and clustered the ones of the same same people together, such that I could easily find photos of me

- The OpenCV script used to pre-process the images that located my facial features in the photos and the rotated, scaled, and shifted the image such that my eyes were in the same place on each image

- The Generative Adversarial Network (GAN) that was used to generated entirely new images from the existing ones.

For coil subscribers, there is another animation showing the learning as it progressed.

Go Top